Session 8

Response Times

Andrew Ellis

Neuroscience Computer Lab, spring semester 2022 (FS 22)

2022-04-12

Next sessions

Evidence accumulation

Bayesian data analysis

Is the brain Bayesian?

1

What can we learn?

ADHD: Response Time Variability

Children with ADHD show cognitive impairments in attention, inhibitory control, and working memory compared with typically developing children.

No specific cognitive impairment is universal across patients with ADHD. One of the more consistent findings in the ADHD neuropsychology literature is increased reaction time variability (RTV), also known as intra-individual variability, on computerized tasks.

Children with ADHD have also been shown to be less able than controls to deactivate the default mode network (DMN), and this inability to deactivate the DMN has been directly related to RTV.

Does RTV reflect a single construct or process? The vast majority of studies have measured it with a single summary statistic, the reaction time standard deviation (RTSD).

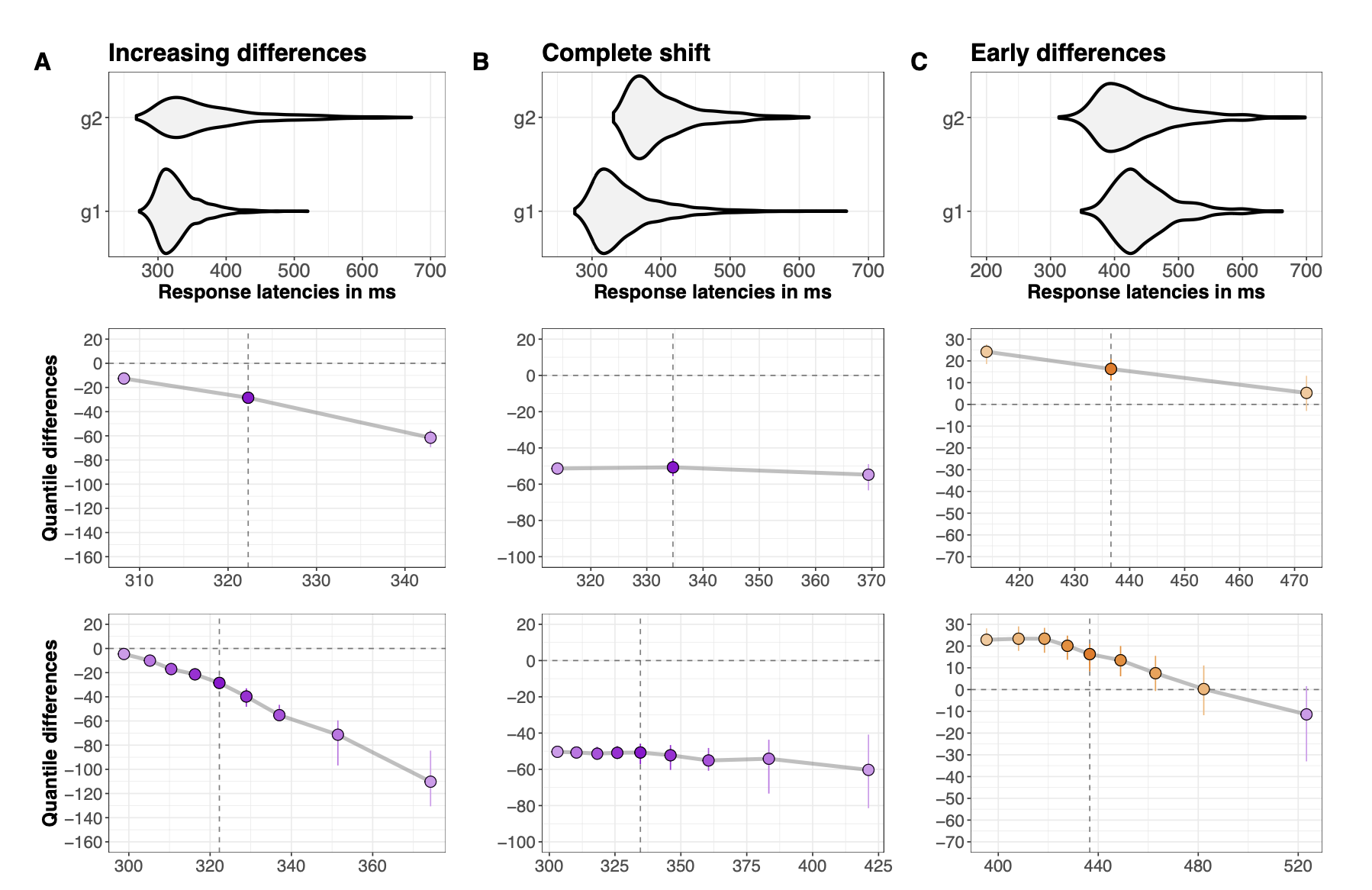
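A single RTSD value cannot tell apart distributions of different shapes. A minimal sketch with simulated data, where all parameter values are illustrative assumptions: a symmetric normal RT distribution and a right-skewed ex-Gaussian one (Gaussian plus exponential component) are set up to share the same mean and SD, yet their quantiles differ.

```r
set.seed(42)
n <- 1e5

# Symmetric RT distribution (ms): mean 500, SD 100
rt_normal <- rnorm(n, mean = 500, sd = 100)

# Right-skewed ex-Gaussian RTs: Gaussian (mu, sigma) plus exponential (tau).
# Its variance is sigma^2 + tau^2 and its mean is mu + tau,
# so tau and mu are chosen to match SD = 100 and mean = 500.
sigma <- 40
tau <- sqrt(100^2 - sigma^2)
mu <- 500 - tau
rt_exg <- rnorm(n, mean = mu, sd = sigma) + rexp(n, rate = 1 / tau)

# Nearly identical RTSD ...
c(normal = sd(rt_normal), exgauss = sd(rt_exg))

# ... but clearly different distribution shapes
probs <- c(0.1, 0.5, 0.9)
rbind(normal = quantile(rt_normal, probs),
      exgauss = quantile(rt_exg, probs))
```

Two participants with these two distributions would look identical if RTV were summarised by the RTSD alone.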

Facilitation

Stroop task vs Simon task

Both tasks show facilitation effects.

Stroop effects are smallest for fast responses and increase as responses slow.

Simon effects are largest for fast responses but decrease, and even reverse, as responses slow (see DEMO).

Pratte, M. S., Rouder, J. N., Morey, R. D., & Feng, C. (2010). Exploring the differences in distributional properties between Stroop and Simon effects using delta plots. Attention, Perception, & Psychophysics, 72(7), 2013–2025. https://doi.org/10.3758/APP.72.7.2013
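A delta plot shows the incongruent-minus-congruent difference at several quantiles as a function of response speed (the average of the two conditions' quantiles). A minimal sketch with simulated data; the helper `delta_plot()` and the quantile probabilities are hypothetical choices, and the simulated effect is built to grow with RT (a Stroop-like pattern).

```r
set.seed(1)
n <- 1e5

# Simulated congruent RTs (ms) with an ex-Gaussian shape
rt_congruent <- rnorm(n, 450, 40) + rexp(n, rate = 1 / 100)
# Stroop-like pattern (assumption): incongruent RTs are drawn from the same
# distribution and scaled by 1.1, so the effect grows with response time
rt_incongruent <- 1.1 * (rnorm(n, 450, 40) + rexp(n, rate = 1 / 100))

# Hypothetical helper: one row per quantile
delta_plot <- function(congruent, incongruent, probs = seq(0.1, 0.9, by = 0.2)) {
  q_con <- quantile(congruent, probs, names = FALSE)
  q_inc <- quantile(incongruent, probs, names = FALSE)
  data.frame(
    prob = probs,
    mean_rt = (q_con + q_inc) / 2,  # x-axis: average speed at this quantile
    delta = q_inc - q_con           # y-axis: congruency effect at this quantile
  )
}

dp <- delta_plot(rt_congruent, rt_incongruent)
dp
```

Plotting `dp$delta` against `dp$mean_rt` gives the delta plot; here the deltas increase from fast to slow quantiles. A Simon-like pattern would instead show deltas that shrink, and possibly turn negative, at the slower quantiles.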

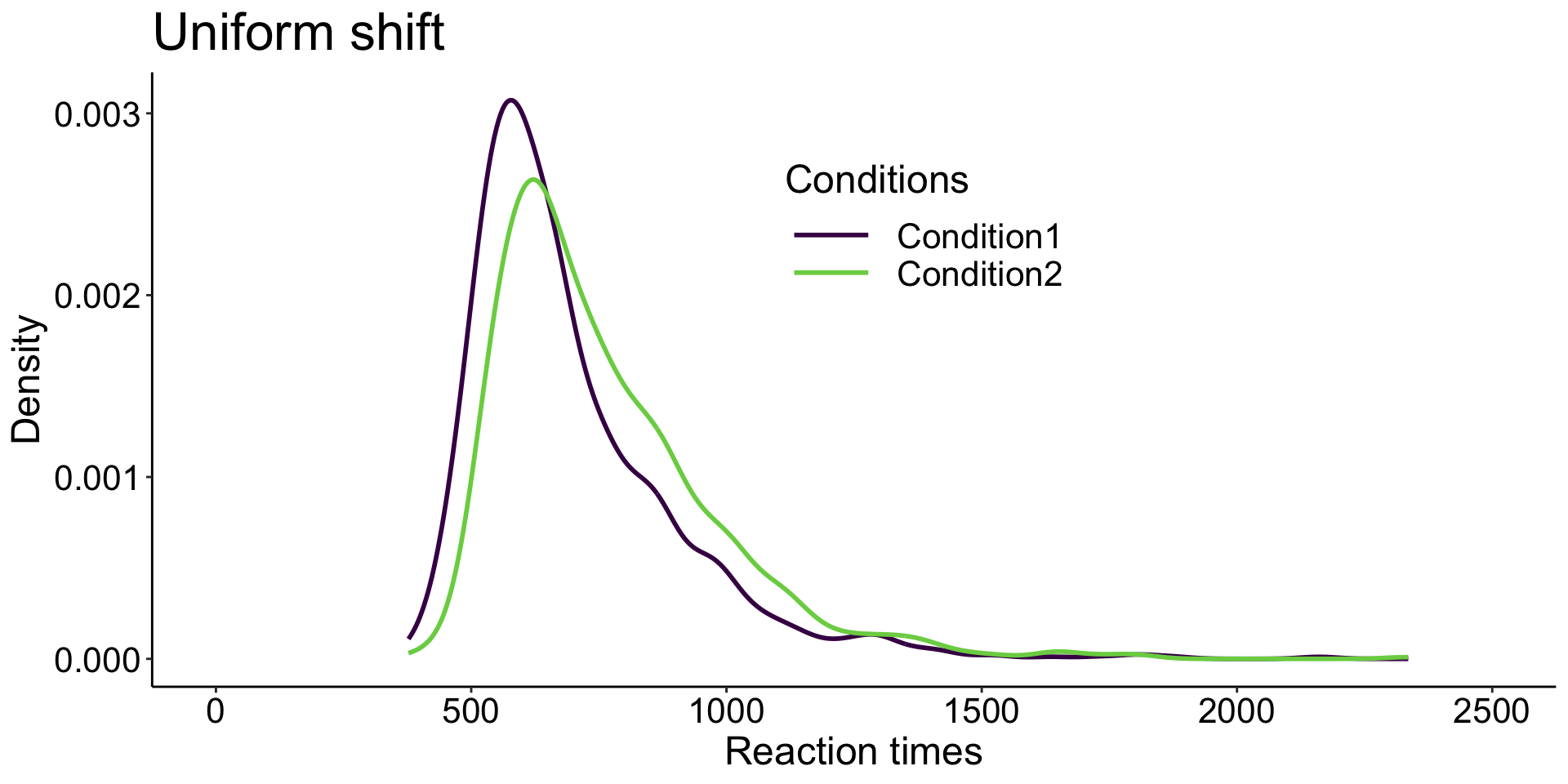

Experimental manipulations

A manipulation may affect slow behavioural responses most strongly, with limited effects on fast responses.

It may affect all responses, fast and slow, similarly.

Or it may have stronger effects on fast responses and weaker ones on slow responses.

Distribution analysis provides much stronger constraints on the underlying cognitive architecture than comparisons limited to e.g. mean or median reaction times across participants.
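To illustrate, a minimal sketch with simulated ex-Gaussian RTs (all parameters are illustrative assumptions): manipulation A slows every response by 50 ms, while manipulation B lengthens the slow tail. Both raise the mean by about 50 ms, but comparing deciles separates the uniform effect from the effect concentrated on slow responses.

```r
set.seed(7)
n <- 1e5
probs <- seq(0.1, 0.9, by = 0.1)  # deciles

# Baseline condition: ex-Gaussian RTs (ms)
baseline <- rnorm(n, 400, 40) + rexp(n, rate = 1 / 100)

# Manipulation A: every response 50 ms slower (uniform shift)
shifted <- rnorm(n, 450, 40) + rexp(n, rate = 1 / 100)
# Manipulation B: exponential tail 50 ms longer (mostly slows slow responses)
stretched <- rnorm(n, 400, 40) + rexp(n, rate = 1 / 150)

# Both manipulations increase the mean by about 50 ms ...
c(A = mean(shifted) - mean(baseline), B = mean(stretched) - mean(baseline))

# ... but the decile differences tell them apart
q_base <- quantile(baseline, probs)
round(rbind(A = quantile(shifted, probs) - q_base,
            B = quantile(stretched, probs) - q_base))
```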

2

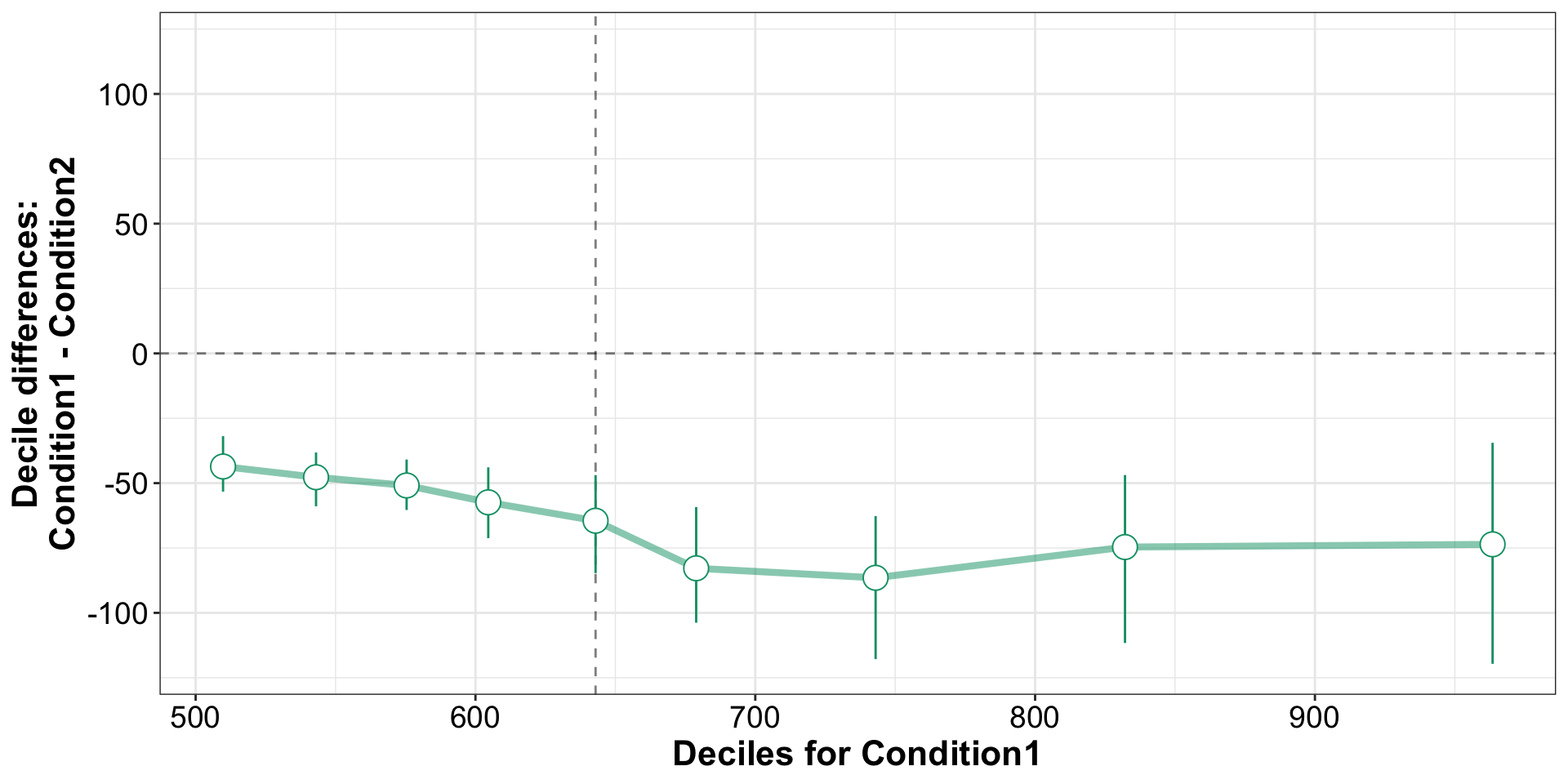

Shift Function

Shift Function: Independent Groups
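A shift function shows, for each decile, the difference between the two groups' quantiles, with a confidence interval. A minimal sketch for independent groups; `shift_function()` is a hypothetical helper, not a library function. The shift function is usually computed with the Harrell-Davis quantile estimator; for simplicity this sketch uses base R's `quantile()` and unadjusted percentile bootstrap intervals, so it approximates rather than reproduces the published method.

```r
set.seed(3)

# Two independent groups of simulated RTs (ms); group 2 is 50 ms slower overall
g1 <- rnorm(1000, 400, 40) + rexp(1000, rate = 1 / 100)
g2 <- rnorm(1000, 450, 40) + rexp(1000, rate = 1 / 100)

# Hypothetical helper: decile differences (y - x) with percentile bootstrap CIs
shift_function <- function(x, y, probs = seq(0.1, 0.9, by = 0.1),
                           nboot = 1000, alpha = 0.05) {
  est <- quantile(y, probs, names = FALSE) - quantile(x, probs, names = FALSE)
  boot <- replicate(nboot, {
    # Resample each group independently, with replacement
    boot_x <- sample(x, replace = TRUE)
    boot_y <- sample(y, replace = TRUE)
    quantile(boot_y, probs, names = FALSE) - quantile(boot_x, probs, names = FALSE)
  })  # matrix: one row per quantile, one column per bootstrap sample
  data.frame(
    prob = probs,
    difference = est,
    ci_lower = apply(boot, 1, quantile, probs = alpha / 2, names = FALSE),
    ci_upper = apply(boot, 1, quantile, probs = 1 - alpha / 2, names = FALSE)
  )
}

sf <- shift_function(g1, g2)
round(sf, 1)
```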

3

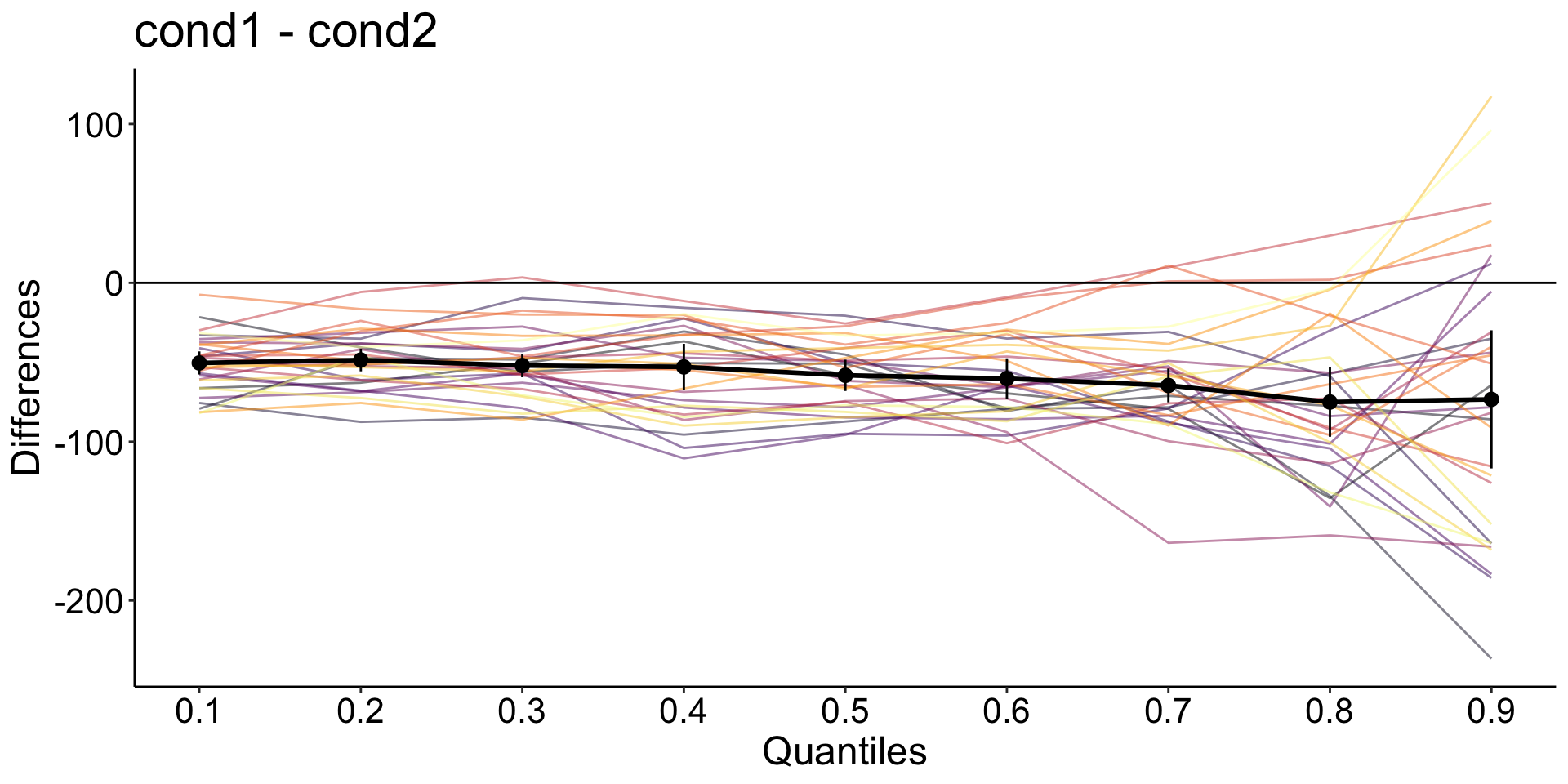

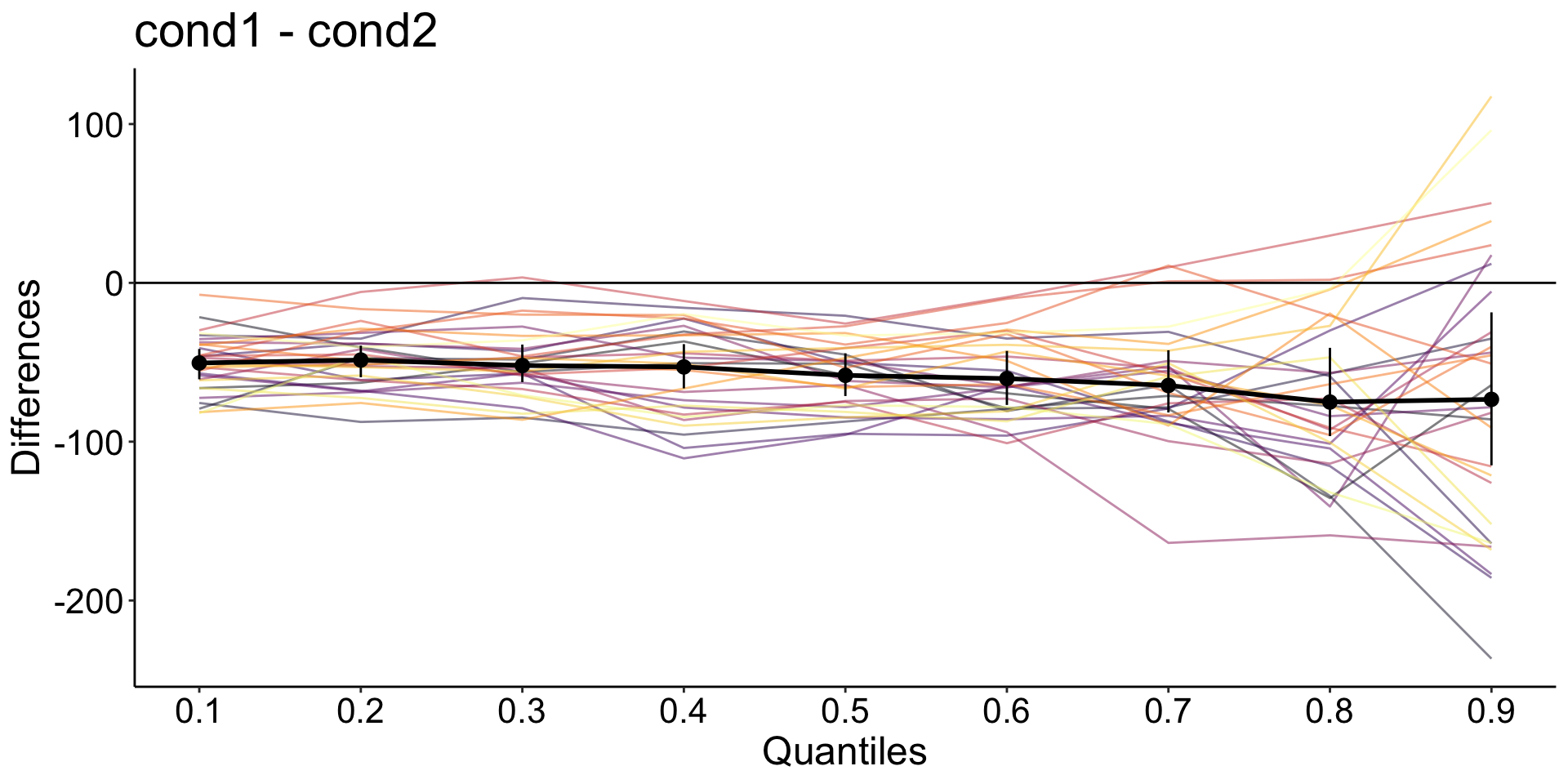

Hierarchical Shift Function

1. Quantiles are computed for the distribution of measurements from each condition and each participant.
2. In each participant, the quantiles of one condition are subtracted from those of the other.
3. A trimmed mean is computed across participants for each quantile.

Confidence intervals are computed using the percentile bootstrap.
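The steps above can be sketched in base R with simulated data. The data-generating values, the number of participants and trials, and the 20% trimming are assumptions for illustration; `sf_by_participant` is a participants-by-quantiles matrix built here, not the output of `hsf()`.

```r
set.seed(11)
n_part <- 30     # number of participants (illustrative)
ntrials <- 200   # trials per condition and participant (illustrative)
probs <- seq(0.1, 0.9, by = 0.1)

# Simulated RTs: each participant has their own speed; condition 2 adds a
# participant-specific effect of about 30 ms (all values are made up)
sf_by_participant <- t(sapply(seq_len(n_part), function(p) {
  speed <- rnorm(1, 0, 50)
  effect <- rnorm(1, 30, 10)
  cond1 <- 400 + speed + rnorm(ntrials, 0, 40) + rexp(ntrials, rate = 1 / 100)
  cond2 <- 400 + speed + effect + rnorm(ntrials, 0, 40) + rexp(ntrials, rate = 1 / 100)
  # Steps 1 and 2: quantiles per condition, then their difference
  quantile(cond2, probs, names = FALSE) - quantile(cond1, probs, names = FALSE)
}))  # rows = participants, columns = quantiles

# Step 3: trimmed mean across participants for each quantile (20% trim assumed)
group_sf <- apply(sf_by_participant, 2, mean, trim = 0.2)

# Percentile bootstrap: resample participants with replacement
boot_sf <- replicate(2000, {
  idx <- sample(n_part, replace = TRUE)
  apply(sf_by_participant[idx, , drop = FALSE], 2, mean, trim = 0.2)
})  # rows = quantiles, columns = bootstrap samples
ci <- apply(boot_sf, 1, quantile, probs = c(0.025, 0.975))

round(rbind(estimate = group_sf, ci), 1)
```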

Hierarchical Shift Function

Participants with all quantile differences > 0

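```r
# Number of quantiles in the hsf() output
nq <- length(out$quantiles)
# Number of participants; assumes individual_sf is a quantiles x participants matrix
np <- ncol(out$individual_sf)

# For each participant (column), count the quantile differences above zero
pdmt0 <- apply(out$individual_sf > 0, 2, sum)

print(paste0('In ', sum(pdmt0 == nq), ' participants (',
             round(100 * sum(pdmt0 == nq) / np, digits = 1),
             '%), all quantile differences are greater than zero'))
```

```
[1] "In 0 participants (0%), all quantile differences are greater than zero"
```

Participants with all quantile differences < 0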

Hierarchical Shift Function

Alternative bootstrapping method: hsf_pb()

4

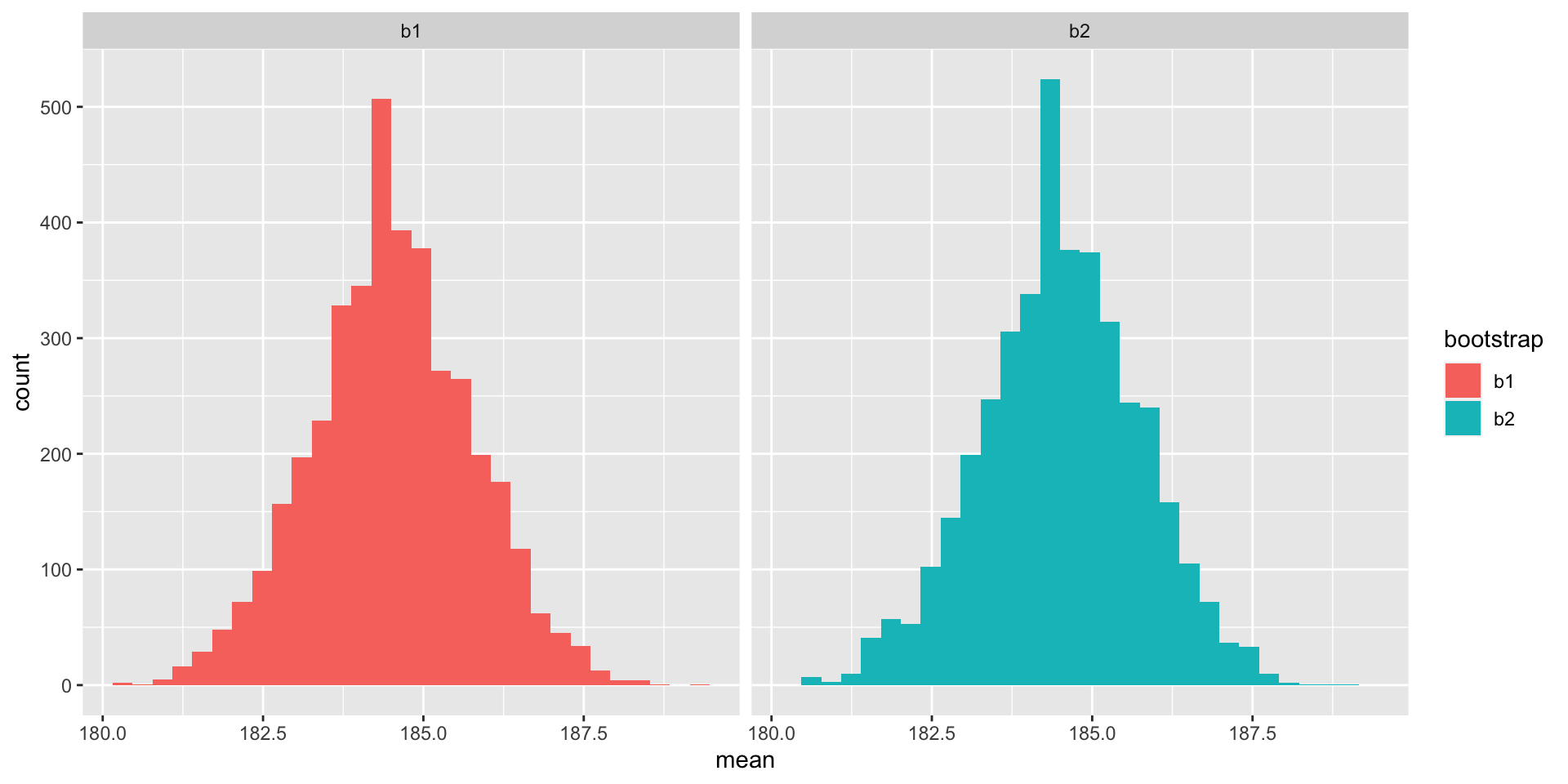

Bootstrapping

“The bootstrap is a computer-based method for assigning measures of accuracy to statistical estimates.” Efron & Tibshirani, An introduction to the bootstrap, 1993

“The central idea is that it may sometimes be better to draw conclusions about the characteristics of a population strictly from the sample at hand, rather than by making perhaps unrealistic assumptions about the population.” Mooney & Duval, Bootstrapping, 1993

Like all bootstrap methods, the percentile bootstrap relies on a simple and intuitive idea: instead of making assumptions about the underlying distributions from which our observations could have been sampled, we use the data themselves to estimate sampling distributions.

In turn, we can use these estimated sampling distributions to compute confidence intervals, estimate standard errors, estimate bias, and test hypotheses (Efron & Tibshirani, 1993; Mooney & Duval, 1993; Wilcox, 2012).

The core principle for estimating sampling distributions is resampling. The technique was developed and popularised by Brad Efron as the bootstrap.
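Estimating bias is one of the uses of the bootstrap listed above. A minimal sketch with a hypothetical simulated sample: the plug-in variance (dividing by n instead of n - 1) underestimates the population variance, and the bootstrap recovers the direction and approximate size of that bias. The sample size and number of bootstrap samples are arbitrary choices.

```r
set.seed(5)
x <- rnorm(50, mean = 500, sd = 100)  # hypothetical sample of 50 RTs (ms)
n <- length(x)

# Plug-in variance: divides by n, so it is biased downwards
var_plugin <- function(v) mean((v - mean(v))^2)

theta_hat <- var_plugin(x)
boot_theta <- replicate(10000, var_plugin(sample(x, replace = TRUE)))

# Bootstrap bias estimate: mean of bootstrap estimates minus original estimate
bias_boot <- mean(boot_theta) - theta_hat
# Under resampling, the expected bias of the plug-in variance is -theta_hat / n
c(bootstrap_bias = bias_boot, expected = -theta_hat / n)

# Bias-corrected estimate
theta_hat - bias_boot
```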

Bootstrapping

Essentially, we run fake experiments using only the observations in our sample: each fake experiment, or bootstrap sample, draws n observations with replacement from the original n. For each bootstrap sample we can compute any estimate of interest, for instance the median.

For a visualization, see this demo.
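Following the fake-experiment idea, a minimal sketch of a 95% percentile bootstrap confidence interval for the median, using a hypothetical sample of 40 simulated RTs; the number of bootstrap samples (5000) is an arbitrary choice.

```r
set.seed(2022)
# Hypothetical sample of 40 right-skewed RTs (ms)
rt <- rnorm(40, 400, 40) + rexp(40, rate = 1 / 100)

nboot <- 5000
# Each bootstrap sample is a "fake experiment": n draws with replacement
boot_medians <- replicate(nboot, median(sample(rt, replace = TRUE)))

# Sample median and 95% percentile bootstrap confidence interval
median(rt)
quantile(boot_medians, c(0.025, 0.975))
```

The same loop works for any estimator: replacing `median` with, for instance, a trimmed mean gives that estimator's sampling distribution instead.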

Bootstrapping

Bootstrapped standard error of the mean:
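A minimal sketch, using a hypothetical sample of 30 simulated RTs: the bootstrap standard error is the standard deviation of the estimates across the B bootstrap samples. For the mean, it should come out close to the textbook formula s / sqrt(n).

```r
set.seed(99)
x <- rnorm(30, mean = 500, sd = 100)  # hypothetical sample of 30 RTs (ms)
nboot <- 10000

boot_means <- replicate(nboot, mean(sample(x, replace = TRUE)))

# Bootstrap SE: standard deviation of the bootstrap means
se_boot <- sd(boot_means)
# Textbook SE of the mean, for comparison
se_formula <- sd(x) / sqrt(length(x))
c(bootstrap = se_boot, formula = se_formula)
```

The bootstrap value tends to be slightly smaller, by a factor of about sqrt((n - 1) / n), because resampling treats the sample as the population.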